Intro

This book is on driver development using Rust. You get to procedurally write a UART driver for a RISCV chip called ESP32C3 and a Qemu-riscv-virt board.

If you have no idea what a UART driver is, then you have nothing to worry about. You'll get a hang of it along the way.

This is NOT a book on embedded-development but it will touch up on some embedded-development concepts here and there.

To learn about Rust on embedded, you are better off reading The Embedded Rust Book.

Book phases, topics and general flow.

The book will take you through 5 phases :

Phase 1:

Under phase 1, you get to build a UART driver for a qemu-riscv virtual board. This will be our first phase because it will take you through the fundamentals without having to deal with the intricacies of handling a physical board. You won't have to write flashing algorithms that suite specific hardware. You won't have to read hardware schematics.

The code here will be suited for a general virtual environment.

The resultant UART driver at the end of this phase will NOT be multi_thread-safe.

Phase 2:

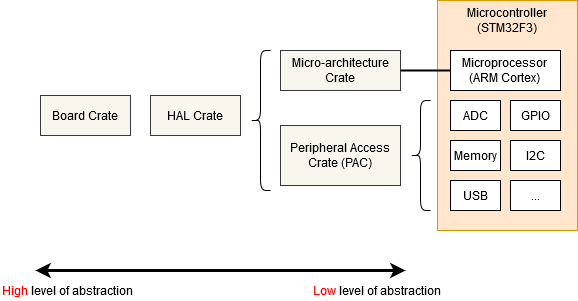

We will try to improve our UART driver code to conform to standard APIs like PAC and HAL. This phase will try to show devs how to make standard drivers that are more portable.

If you have no idea what HAL and PACs are, you hav nothing to worry about. You'll learn about them along the way.

Phase 3:

Both Phase 1 and 2 focus on building a UART driver for a virtual riscv board, BUT phase 3 changes things up and focusses on porting that UART code to a physical board.

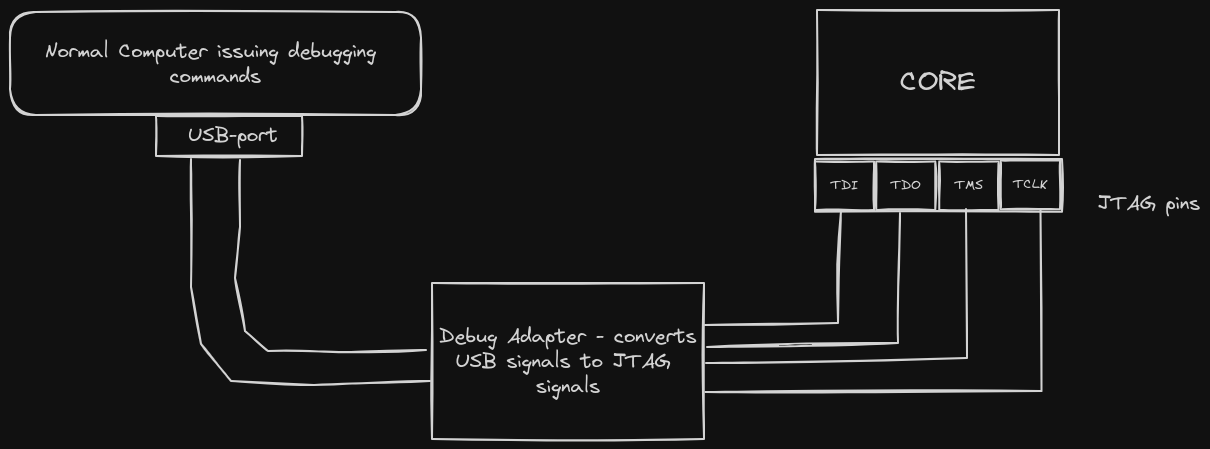

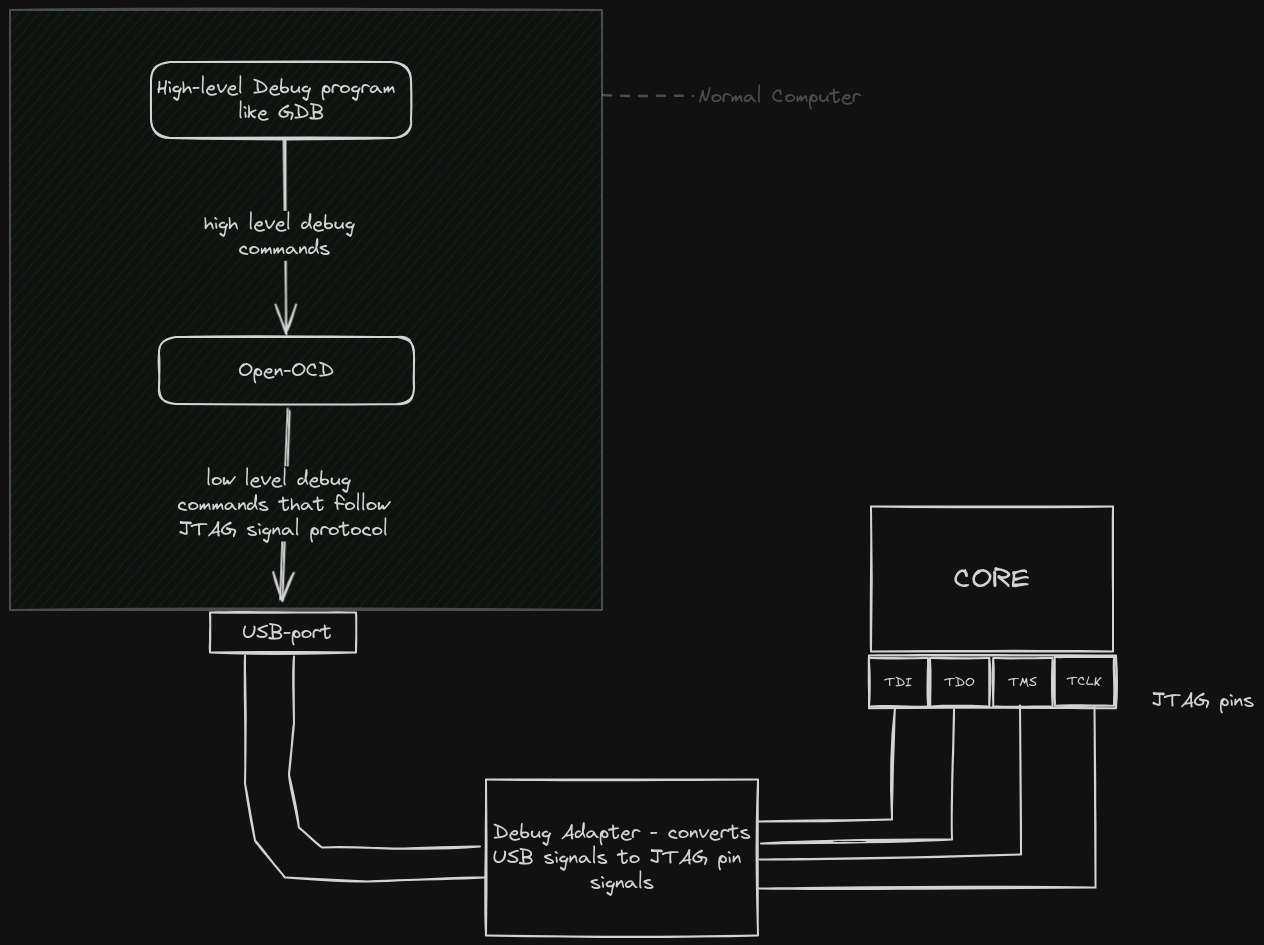

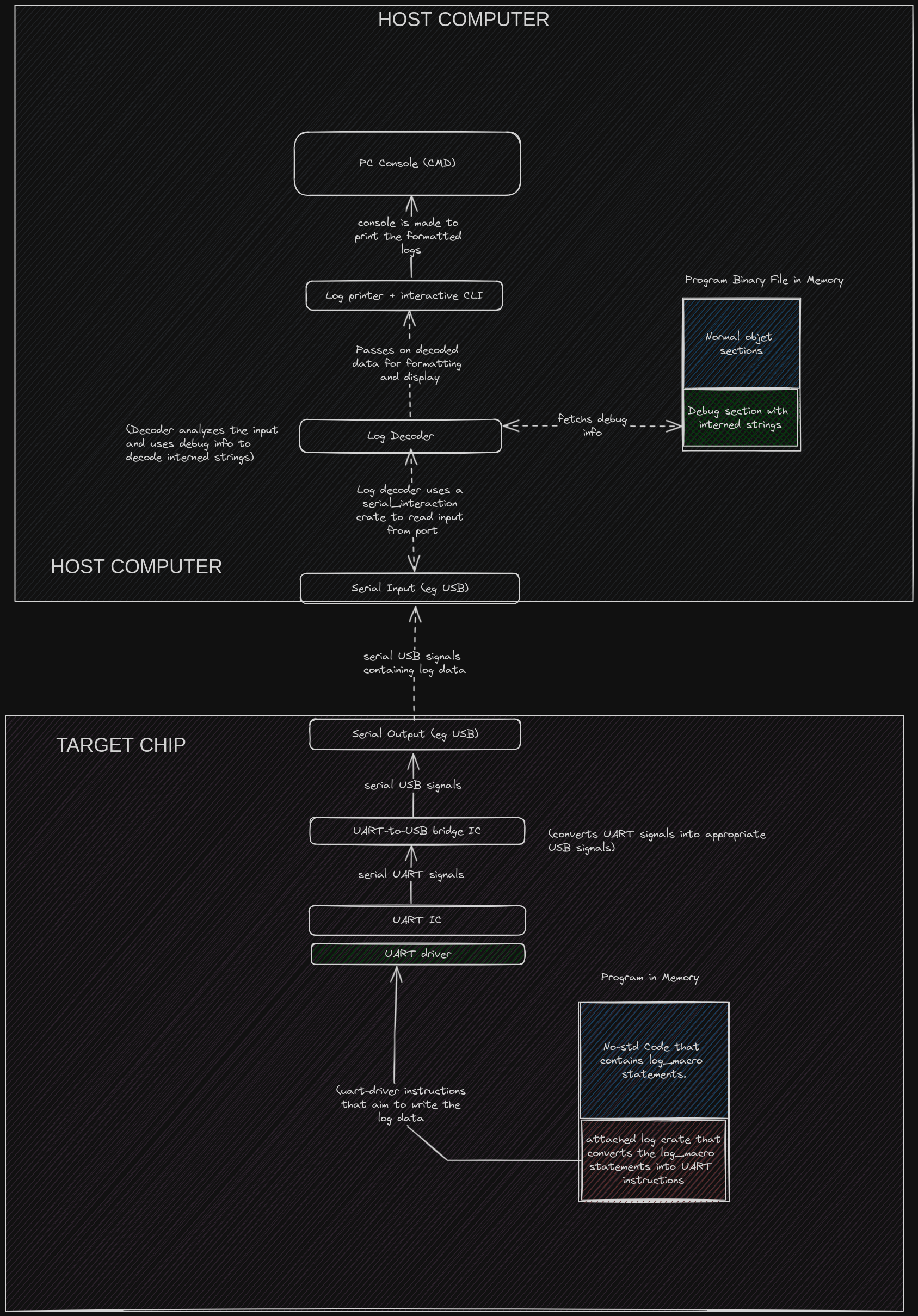

We will modify the previously built UART driver so that it can run on an esp32 physical board. We'll set up code harnesses that assist in flashing, debugging, logging and testing the driver-code on the physical board.

On normal circumstances, people use common pre-made and board-specific tools to do the above processes : ie testing, logging, debugging and flashing.

For example, developers working with Espressif Boards usually use Espressif tools like esptool.py.

We will not use the standard esp-tools but we will use probe-rs, this is because esp-tools abstract away a lot of details that are important for driver-devs to master. Esp-rs tools are just too good to use in our use-case... it would be awesome if we write our own flashing algorithms and build or own logging module. Probe-rs is hack-able and allows one to do such bare activities.

We will however imitate the esp-tools.

The driver produced in this phase will still NOT be multi_thread-safe.

Phase 4:

Under Phase 4, we start making our driver to be multi-thread safe. This will be first done in a qemu virtual environment to reduce the complexity. After we have figured our way out of the virtual threads, we will move on to implementing things on the physical board.

Phase 5:

In phase 5, We'll do some brush-up on driver-security and performance testing.

Why the UART?

The UART driver was chosen because it is simple and hard at the same time. Both a beginner and an experienced folk can learn a lot while writing it.

For example, the beginner can write a minimal UART and concentrate on understanding the basics of driver development; No-std development,linking, flashing, logging, abstracting things in a standard way, interrupt and error-handling...

The pseudo_expert on the other hand can write a fully functional concurrent driver while focusing on things like performance optimization,concurrency and parallelism.

A dev can iteratively work on this one project for a long time while improving on it and still manage to find it challenging on each iteration. You keep on improving.

Moreover, the UART is needed in almost all embedded devices that require some form of I/O; making it a necessary topic for driver developers.

The main aim here is to teach, not to create the supreme UART driver ever seen in the multiverse.

What this book is NOT

This book does not explain driver development for a particular Operating System or Kernel. Be it Tock, RTOS, Windows or linux. This book assumes that you are building a generic driver for a bare-metal execution environment.

Quick links

To access the tutorial book, visit : this link

To access the source-code, visit this repo's sub-folder

This is an open-source book, if you feel like you want to adjust it...feel free to fork it and create a pull-request.

Prerequisites for the Book

The prerequisites are not strict, you can always learn as you go:

-

Computer architecture knowledge : you should have basic knowledge on things like RAM, ROM, CPU cycle, buses... whatever you think architecture is.

-

Rust knowledge : If you've read the Rust book, you should be fine. This doesn't mean that you should have mastered topics like interior mutability, concurrency or macros...these are things that you can learn as you go. Ofcourse, you will need them, but it's better to learn them on the job for the thrill of it.

-

Have an esp32-c3... or any riscv board that you are comfortable with.

-

Have some interest in driver development. Learning without interest is a mental torture. Love yourself.

And finally, have lots of time and patience.

Goodluck.

Intro to Drivers

This chapter is filled with definitions.

And as you all know, there are NO right definitions in the software world. Naming things is hard. Defining things is even harder.

People still debate what 'kernel' means. People are okay using the word 'serverless' apps. What does AI even mean? What is a port? What is a computer even? It's chaos everywhere.

So the definitions used in this book are constrained in the context of this book.

What's a driver? What's firmware?

Drivers and firmwares do so many things. It is an injustice to define them like how I have defined them below.

In truth, the line between drivers and firmware is very thin. (we'll get back to this... just forget that I mentioned it).

But here goes the watered down definitions...

Firmware

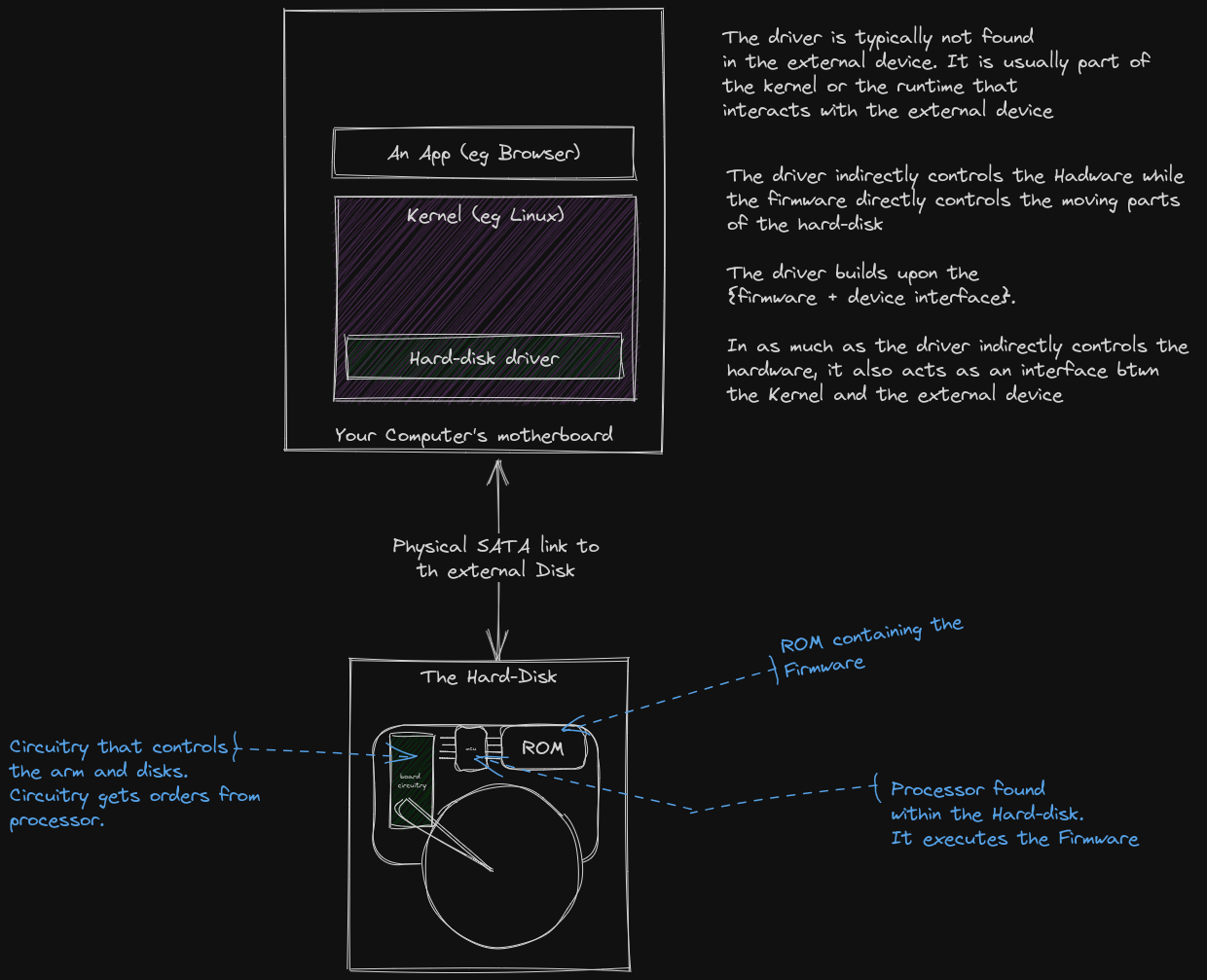

Firmware is software that majorly controls the hardware. It typically gets stored in the ROM of the hardware.

For example, an external hard-disk device has firmware that controls how the disks spin and how the actuator arms of the hard-disk do their thing.

That firmware code is independent of any OS or Runtime... it is code specifically built for that hard-disk's internal components. The 0s and 1s of the firmware are stored in a place where the circuitry of the hard-disk can access and process.

You may find that the motherboard of the hard-disk has a small processor that fetches the firmware code from the embedded ROM amd processes it. See the figure below.

Driver

On the other hand, a Driver is software that also controls the hardware AND provides a higher level interface for something like a kernel or a runtime. The driver is typically stored as part of the kernel.

The above definitions are confusing? Ha?

Here is an image to confuse you further...

A driver typically sits in-between a high-level program and the physical device.

The high level program could be a kernel in this case. And the physical device could be an hard-disk attached to the motherboard.

The driver has 2 primary functions :

- Control the underlying device. (the hard-disk)

- Provide an interface for the kernel/higher-level program to interact with. The interface could contain things like public functions, data_structures and message passing endpoints that somehow manipulate how the driver controls the underlying device...

Here is a Bird's eye-view of the driver-to-firmware ecosystem:

Let's break down the two main roles of the driver in the next chapter...

But before we turn the page, remember when we said that the line between drivers and firmware is thin?

Well... here is an explanation

Role 1 : Controlling the Physical device below

TLDR :

Software controls the hardware below by either Direct Register Programming or Memory Mapped Programming. This can be done using Assembly language, low-level languages like C/Rust, or a mixture of both.

In the previous page, we concluded that BOTH firmware and drivers control hardware.

So, what does controlling hardware mean? How tf is that possible?

What is Hardware??

Hardware in this case is a meaningful combination of many electronic circuits.

A Hard-disk example

For example, a Hard Disk is made up of circuits that store data in form of magnetic pockets, handle data-retrival, handle heat throttling, data encryption... all kinds of magic.

In fact, let's try to make an imaginary-over-simplified DIY hard-disk.

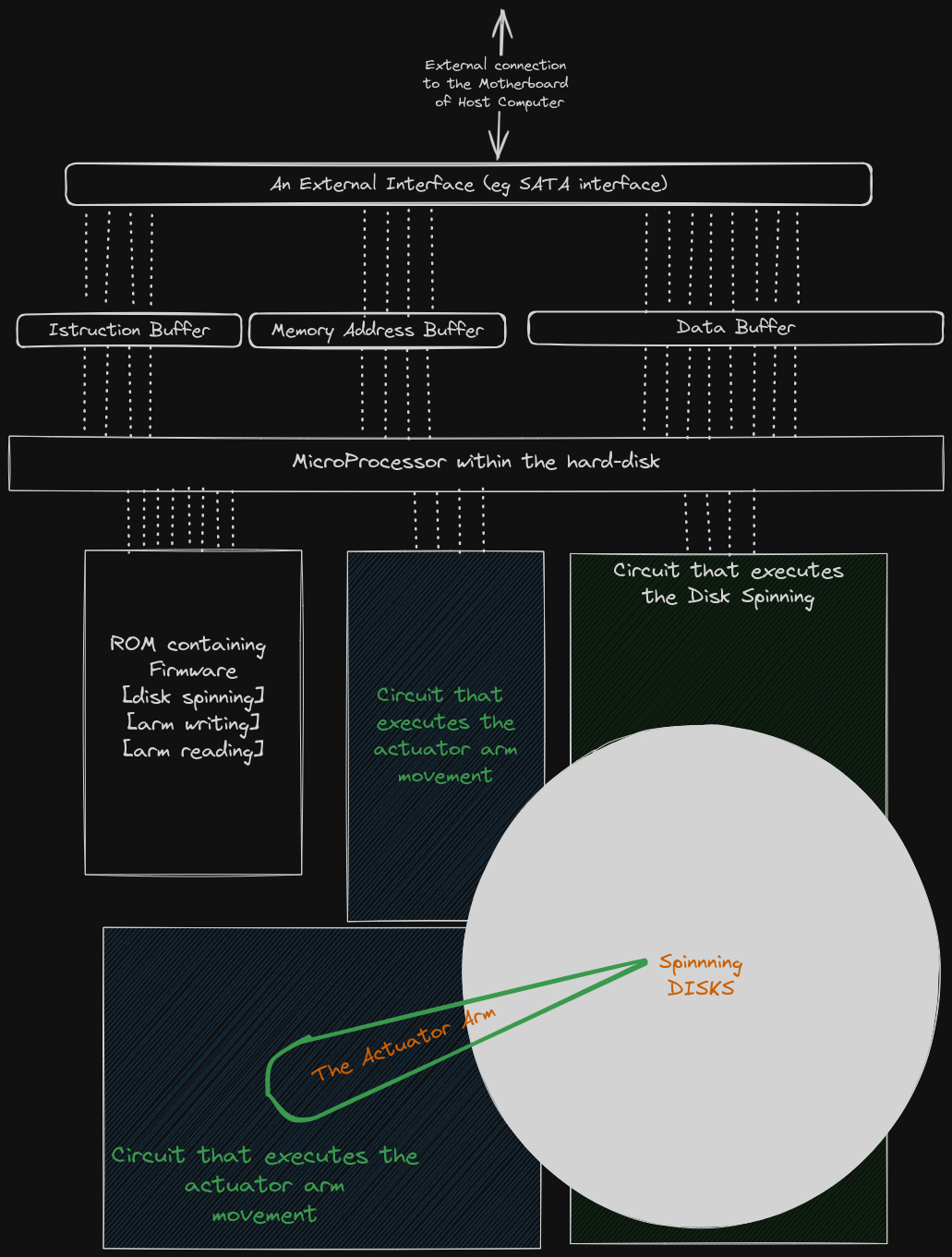

Here's a sketch...

Here is a break-down of the above image.

-

The External interface

The external interface is the physical port that connects the Hard-disk to the host computer.

This interface has 2 functions:- The interface receives an instruction, a memory address and data from the host computer and respectively stores them in the

Instruction Buffer,Memory Address BufferandData Bufferfound within the hard-disk. The acceptable instructions are only two:READ_FROM_ADDRESS_XandWRITE_TO_ADDRESS_X.

TheMemory Address Buffercontains the address in memory where the host needs to either read from or write to.

TheData Buffercontains the data that has either been fetched from the disk or is waiting to written to the disk.

- The interface also receives data from within the hard-disk and transmits them to the Host computer

- The interface receives an instruction, a memory address and data from the host computer and respectively stores them in the

-

The ROM contains the Hard-disk's firmware. The Hard-disk's firmware contains code that ...

- handles heat throttling

- handles the READ and WRITE function of the Actuator Arm

- handles the movement of the Actuator Arm

- handles the spinning speed of the disks

-

A small IC or processor that fetches and executes both the Hard-disk's firmware and the instructions stored in the

Instruction Buffer.The micro-processor continuously does a fetch-execute cycle on both the

Instruction Bufferand the ROM.If the instruction fetched from the

Instruction BufferisREAD_FROM_ADDRESS_X, the processor begins the READ operation.

If the instruction fetched from theInstruction BufferisWRITE_TO_ADDRESS_X, the processor begins the WRITE operation.Steps of the READ operation...

- The processor fetches the target memory address from the

Memory Address Buffer. - The processor fetches & executes the firmware code to spin the disks accordingly in order to facilitate a read from the target address.

- The processor fetches & executes firmware code to move the Actuator Arm to facilitate an actual read from the span disks.

- After the read, the processor puts the fetched data in the

Data Buffer - The External Interface fetches data from the

Data Bufferand transmits it to the Host. - The processor clears the

Instruction Buffer,Memory Address BufferandData Bufferin order to prepare for a fresh read/write operation. - The Read operation ends there.

Steps of the WRITE operation...

- The processor fetches the target memory address from the

Memory Address Buffer. - The processor acquires the data that is meant to be written to the Hard-disk. This data is acquired from the

Data Buffer. - The processor fetches & executes firmware code to spin the disks accordingly in order to facilitate a write to the target address.

- The processor fetches & executes firmware code to move the Actuator Arm to facilitate a write.

- The processor clears the

Instruction Buffer,Memory Address BufferandData Bufferin order to prepare for a fresh read/write operation. - The Write operation ends there.

- The processor fetches the target memory address from the

A manual driver?

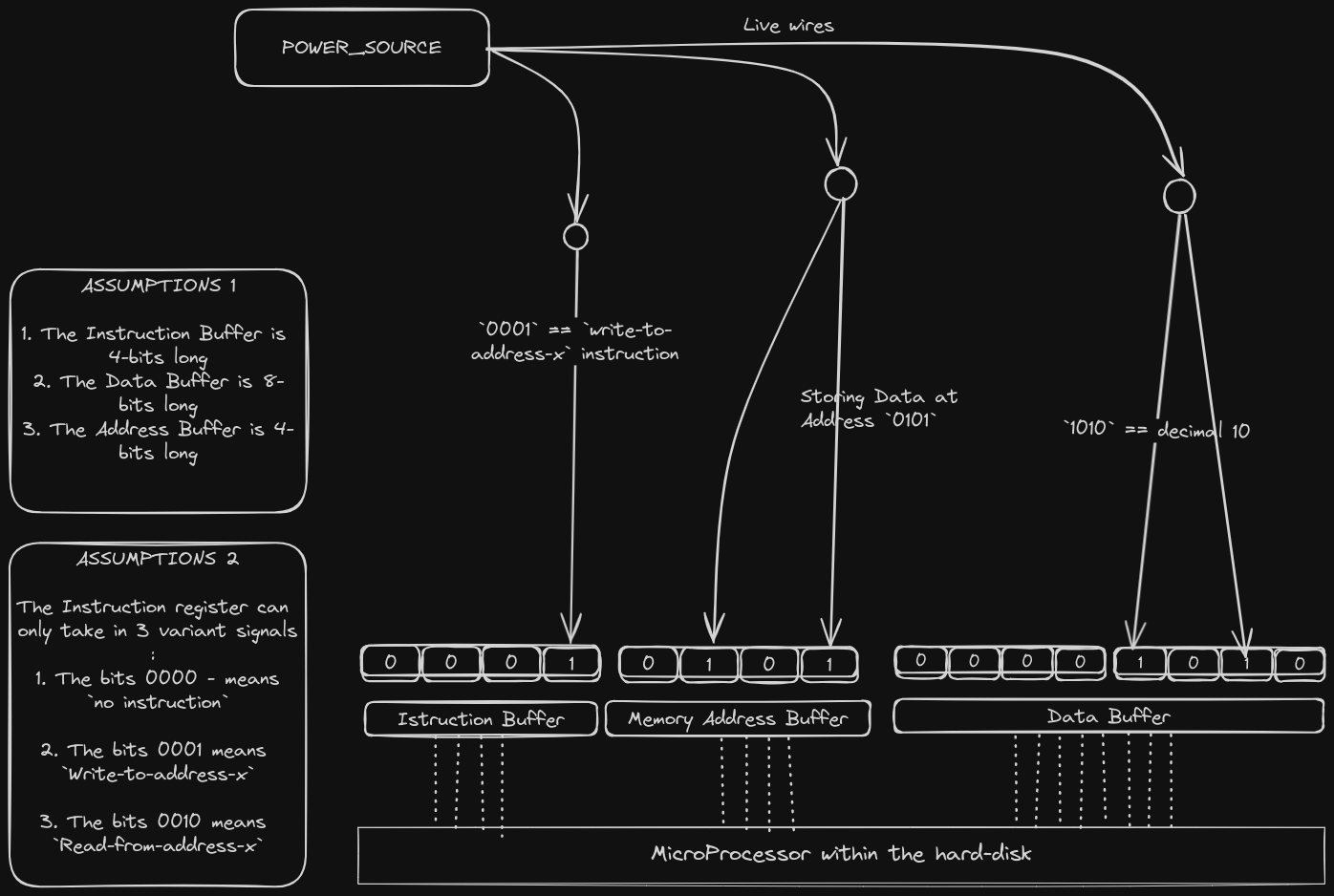

If we were in a zombie apocalypse and we had no access to a computer for us to plug in a hard-drive, how would we have stored data into the hard-disk?

We could store data directly without using a computer with an Operating system that has hard-disk drivers. All we have to do is to supply meaningful electric signals to the external interface of the Hard-disk. You could do this using wires that you collected from the car you just stripped for parts. We are in an apocalypse, remember?

For example, to store the decimal number 10 into the address 0b0101, we could do this...

Strip, the external interface off and access the 3 registers directly: The Data Buffer register, Instruction Buffer register & Memory Address Buffer register.

From there, we could supply the electrical signals as follows...

Of-course, this experiment is very hard to do in real life. But come on, we are in an apocalypse and we have just build ourselves an 8-bit DIY hard-disk. Kindly understand.

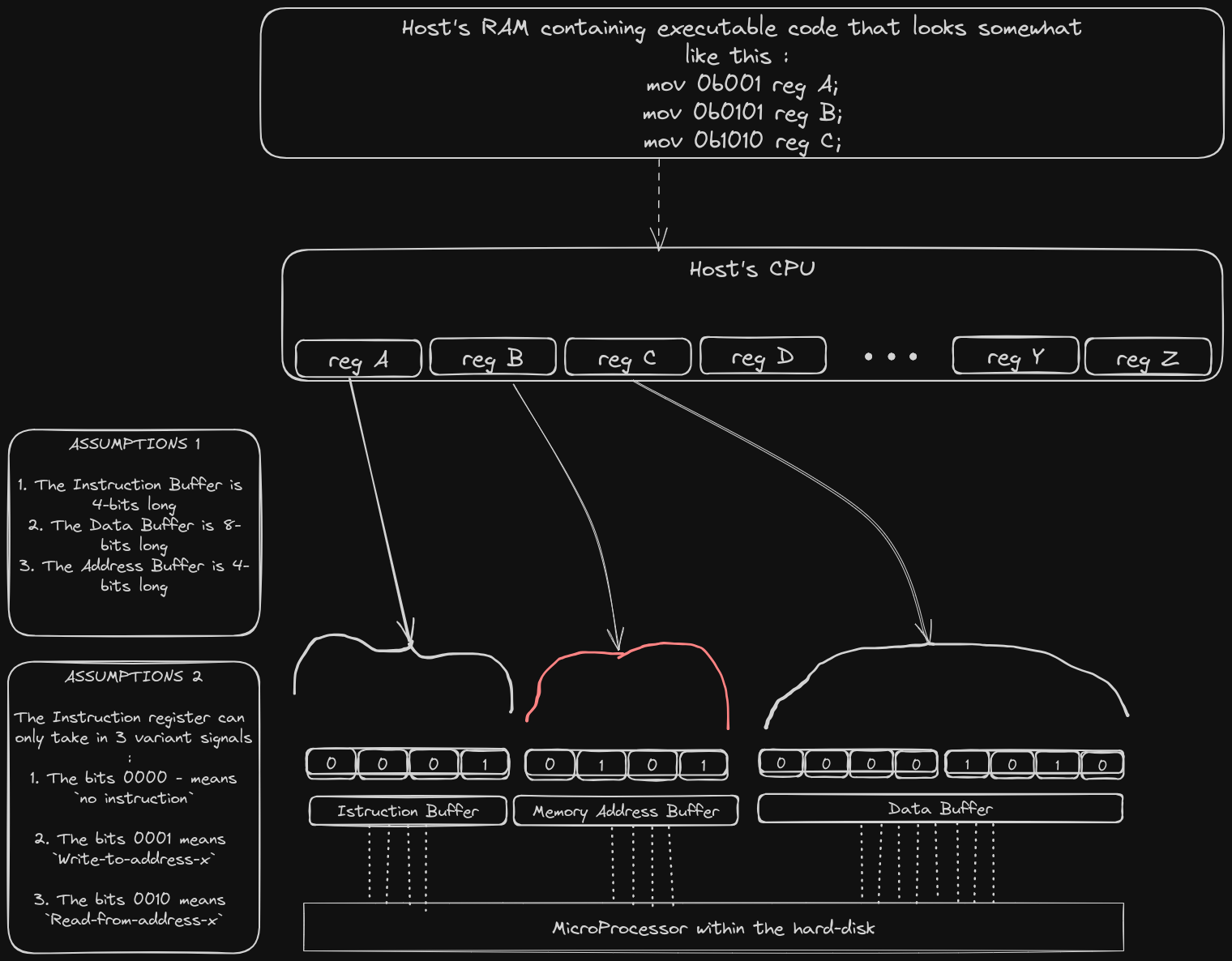

Programming

We are developers, we automate everything... especially when it is unnecessary.

So how do we automate this manual manipulation of Hard-disk registers? How??

Let us imagine that in the middle of the apocalypse, we found a host computer where we can plug in our DIY hard-disk.

Solution 1: Direct Register Programming

Now that we have access to a Host computer with a CPU, we can attach all the 3 registers DIRECTLY to the CPU of the host computer as shown in the figure below.

To control which signals reach the individual bits of the 3 registers, we can write some assembly code to change the values of the native CPU registers. It is our assumption that the electrical signals will find their way to the attached registers... (basic electricity)

This solution gets the job done.

This solution is typically known as Direct register programming. You directly manipulate the values of the CPU registers, which in turn directly transfer the values to the registers of the external device.

Solution 2: Memory Mapped Programming

The CPU has a limited number of registers. For example, the bare minimum RiscV Cpu has only 32 general registers. Any substantial software needs more than 32 registers to store variables. The RAM exists because of this exact reason, it acts as extra storage. In fact the stack of most softwares gets stored in the RAM.

The thing is... registers are not enough.

So instead of directly connecting the Hard-disk registers to the limited CPU registers, we could add the external-device registers to be part of the address space that the CPU can access.

We could then write some assembly code using standard memory access instructions to indirectly manipulate the values of the associated hard-disk registers. This is called Memory-mapped I/O programming (mmio programming).

MMIO maps the registers of peripheral devices (e.g., I/O controllers, network interfaces) into the CPU’s address space. This allows the CPU to communicate with these devices using standard memory access instructions (load/store)

This is the method that we will stick to because it is more practical.

You could however use Direct Register Programming when building things like pace-makers, nanobots or some divine machine that is highly specialized and requires very little indirection when fetching or writing data to addresses.

This is because dedicated registers typically perform better than RAMS and ROMS in terms of access-time.

Summary

The driver controls the hardware below by either Direct Register Programming or Memory Mapped Programming. This can be done in Assembly, low-level languages like C/Rust, or a mixture of both.

Role 2: Providing an Interface

The driver acts as an interface between the physical device and the kernel.

In this case, the 'physical device' is inclusive of its internal firmware.

The Driver abstracts the device as a simplified API.

We will learn about HALs and PACs in future chapters. You can ignore them for now.

{this is an undone chapter. Abstraction is an art. The author is still trying to find his rhythm.}

{For devs with stable styles, you can edit this page.}

Types of Drivers

Classifications and fancy words do not matter, we go straight to the list of driver-types.

Drivers are classified according to :

- How close the driver is to the metal.

- What the function of the driver is.

Drivers classified by their level of abstraction.

Like earlier mentioned, drivers are abstractions on top of devices. And it is well known that abstractions exist in levels; you build higher-level abstractions on top of lower level abstractions.

Here are the two general levels in the driver-world.

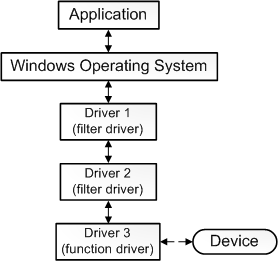

-

Function drivers : these drivers implement functions that directly manipulate the external device's registers. You could say that these drivers are the OG drivers. They are at the lowest-level of abstraction. They are one with the device, they are one with the metal.

-

Filter drivers/ Processing drivers/ Wrapper drivers: These drivers take input from the function drivers and process them into palatable input and functions for the kernel. They can be seen as 'adapters' between the function-driver and the kernel. They can be used to implement additional security features. Point being, their main function is wrapping the function-driver.

Oh look... this 👇🏻 is what we were talking about... thanks windows for your docs.

This image was borrowed from the Windows Driver development docs

A driver stack is a collection of different drivers that work together to achieve a common goal.

Drivers classified by function

This classification is as straightfoward as it seems. eg Drivers that deal with hard-disks and SSDs are classified as storage drivers. More examples are provided below:

- storage drivers : eg ssd drivers

- Input Device Drivers

- Network Drivers

- Virtual drivers (Emulators)

- This list can be as long as one can imagine... but I hope you get the drift

IMO, classification is subjective, a driver can span across multiple classifications.

Bare Metal Programming

Bare Metal Programming !!!!!!!!!!🥳🥳🥳🥳

Welcome to the first cool chapter.

Bare metal programming is the act of writing code that can run on silicon without any fancy dependencies such as a kernel.

This chapter is important because both Firmware and Drivers are typically Bare-metal programs themselves.

This chapter takes you through the process of writing a program that does not depend on the standard library; A program that can be loaded and ran on any board with a processor and some bit of memory... be it an arduino, an esp, a pregnancy-test-device or a custom board that you manufactured in your bedroom.

Here are 2 alternative resources :

Philipp Oppermann's blog covered Bare-metal programming very well. Philipp's blog covers the topic across two chapters, you can read them here 👇🏽:

- Chapter 1 : A Freestanding Rust Binary

- Chapter 2 : A Minimal Rust Kernel

The Embedonomicon gives you a more detailed and systematic experience.

Two cents : Start with the Blog and then move on to the Embedonomicon.

Machine code

From your Computer architecture class, you learnt that the processor is a bunch of gates cramped up together in a meaningful way. A circuit of some sort.

You also learnt that each processor implements an ISA (Instruction Set Architecture).

As long as you can compile high level code into machine code that is within the bounds of the ISA, that CPU will gladly execute your code. You don't even have to follow a certain ABI in order for that code to run.

The main point here is that : "Machine code that follows a certain ISA can run on any processor that implements that ISA." This is because the processor is a DIRECT implementation of the ISA specifications.

So a home-made processor that you built in your room can run Rust code as long as that rust code gets compiled into machine code that follows the ISA specifications of your custom processor.

Dependencies

A dependency refers to a specific software component or library that a project relies on to function properly.

For example, the hello-world program below uses the time library as a dependency:

use time; fn main(){ println!("Hello world!!!"); let wait_time : Duration = time::Duration::from_seconds(5); thread::sleep(wait_time); }

Default dependencies

By default, all rust programs use the std library as a dependency. Even if you write a simple hello-world or an add-library, the contents of the std::prelude library get included as part of your code as if you had written it as follows...

use std::{self, prelude::*}; // this line is usually not there... but theoretically, // your compiler treats your code as if this line was explicitly declared here fn main(){ println!("Hello world!!!"); }

So ... what is the standard library? What is a prelude?

The Standard Library

The standard library is a library like any other... it is just that it contains definitions of things that help in very essential tasks. Tasks that are expected to be found in almost every OS.

For example, it may contain declarations & definitions of file-handling functions, thread-handling functions, String struct definition, ... etc

You can find the documentation of the rust standard library here.

Below is a story that explains more about standard libraries (disclaimer: the story does not even explain the actual modules of the standard library).

Story time

You can skip this page if you already understand ...

- What the standard library is

- Why it exists

- The different standards that may be followed

System Interface Standards

Long ago ... once upon a time (in the 70s-80s), there were a lot of upcoming operating systems. Each operating system had its's own features. For example, some had graphical interfaces, some didn't. Some could run apps using multi-threading capabilities, others didn't. Some had file systems that contained like 100 functions... others had like 10 file-handling functions.

It was chaos everywhere. For example : the open_file() function might have had different names across many operting systems. So if you wrote an app for 3 OSes, you would have re-written your code 3 times just because the open_file function was not universal.

It was a bad time to be an application developer. You either had to specialize in writing apps for one operating system OR sacrifice your sanity and learn the system functions of multiple Operating systems.

To make matters worse... the individual operating systems were improving FAST, it was a period when there were operating system wars... each new weekend introduced breaking changes in the OS API...so function names were changing, file_handling routines were changing, graphical output commands were changing. CHAOS! EVERYWHERE.

So developers decided that they needed some form of decorum for the sake of their sanity.

They decided to create common rules and definitions on the three topics below :

- Basic definitions of words used in both kernel and application development

- System interface definition

- Shell and utilities.

So what the hell are these three things?

1. Basic definitions

Just as the title says, before the devs made rules, they had to first know that they were speaking the same language. I mean... how can you make rules about something that you don't even have a description for?

They had to define the meaning of words. Eg "What is a process? What is an integer? What is a file? What is a kernel even?

Defining things explicitly reduced confusion.

They had to ...

- Agree on the definition of things ie terminology.

- Agree on the exact representation of data-types and their behavior. This representation does not have to be the same as the ABI that you are using, you just have to make sure that your kernel interface treats data-types as defined here.

- Agree on the common constants : For example error_codes and port numbers of interest ...

2. System Interface

As earlier mentioned, each kernel had different features and capabilities... some had dozens of advanced and uniquely named file-handling functions while others had like 2 poorly named and unique file-handling functions.

This was a problem. It forced devs to have to re-write apps for each OS.

So the devs sat down and created a list of well-named function signatures... and declared that kernel developers should implement kernels that us those exact signatures. They also explicitly defined the purpose of each of those functions. eg

void _exit(int status); # A function that terminates a process

You can find the full description of the _exit function under POSIX.1-2017 and see how explicit the definitions were.

This ensured that all kernels, no matter how different, had a similar interface. Now devs did not need to re-write apps for each OS. They no longer had to learn the interfaces of each OS. They just had to learn ONE interface only.

These set of functions became known as the System interface.

You can view the POSIX system interface here

3. Shell and its utilities

The Operating system is more than just a kernel. You need the command line. You may even need a Graphic User Interface like a Desktop.

In the 1980's, shells were the hit. So there were dozens of unique shells, each with their own commands and syntax.

The devs sat down and declared the common commands that had to be implemented or availed by all shells eg ls, mv, grep, cd...

As for the shell syntax... well... I don't know... the devs tried to write a formal syntax. It somehow worked, but people still introduced their own variations. Humanity does not really have a universal shell syntax.

(which is good, bash syntax is horrifying... the author took years to get good at Rust/JS/C/C++, but they're sure they'll take their whole life to get comfortable with bash. Nushell to the rescue.)

There are a few standards that cover the above 3 specifications. Some of them are:

- POSIX standard

- WASI (WebAssembly System Interface)

- Windows API (WinAPI)

Entry of the standard library

Why is this 'System Interface Standards' story relevant?

Well... because the functions found in the Rust standard library usually call Operating system functions in the background(i.e POSIX-like functions). In other words, the source-code for the standard library may call POSIX-system functions in the background.

POSIX compliance

If you look at the list of system functions specified by posix, you might get a heart-attack. That list is so Long!!.

What if I just wanted to create a small-specialized kernel that does not have a file-system or thread-management? Do I still have to define file-handling functions? Do I still have to define thread-management functions? - NO!, that would be a waste of everyone's time and RAM.

So we end up having kernels that define only a subset of the posix system interfaces. So Kernel A may be more Posix-compliant than Kernel B just because kernel A implements more system interfaces than B... it is up to the developers to know which level of tolerance they are fine with.

The level of tolerance is sometimes called Posix Compliance level. I find that name limiting, I prefer 'level of tolerance'.

C example

Read about The C standard library and its relation to System interfaces from this wikipedia page.

Bare-metal

So by now you understand what the standard library is.

You understand why it exists.

You somehow understand its modules and functions.

You understand that the standard library references and calls system functions in its source code. The Std assumes that the underlying operating system will provide the implementations of those system functions.

These system function definitions can be found in files found somewhere in the OS.

You understand that the interface definition of a standard library is 'constant'. ( i.e it is standardized, versionized and consistent across different platforms)

You understand that the implementation of a standard library is NOT constant because It is OS-dependent. For example the interfaces of the libc library is constant across all OSes but libc's implementations is different across all OSes; In fact the libc implementations have different names ... we have glibc for GNU-Linux's libc and CRT for windows' libc. GNU-linux even has extra implementations such as musl-libc. Windows has another alternative implementation called MinGW-w64

No-std

Most rust programs depend on the standard library by default, including that simple 'hello world' you once wrote. The standard library on the other hand is dependent on the underlying operaring system or execution environment.

For example, the body of std::println! contains lines that call OS-defined functions that deal with Input and output eg write() and read().

Drivers provide an interface for the OS to use, meaning that the OS depends on drivers... as a result, you have to write the driver code without the help of the OS-dependent Standard Library. This paragraph sounds like a riddle ha ha... but you get the point... to write a driver, you have to forget about help from the typical std library. That std library depends on your driver code... the std library depends on you.

When software does not depend on the standard library, it is said to be a bare-metal program. It can just be loaded to the memory of a chip and the physical processor will execute it as it is.

Bare metal programming is the art of writing code that assumes zero or almost-no hosted-environment. A hosted environment typically provides a language runtime + a system interface like POSIX.

We will procedurally create a bare metal program in the next few sub-chapters.

Execution Environments

An Execution environment is the context where a program runs. It encompasses all the resources needed to make a program run.

For example, if you build a video-game-plugin, then that plugin's execution environment is that video-game.

In general software development, the word execution-environment usually refers to the combination of the processor-architecture, the kernel, available system libraries, environment variables, and other dependencies needed to make apps run.

Here is more jargon that you can keep at the back of your head:

The processor itself is an execution environment.

If you write a bare-metal program that is not dependent on any OS or runtime, you could say that the processor is the only execution environment that you are targeting.

The kernel is also an execution environment. So if you write a program that depends on the availability of a kernel, you could say that your program has two exeution environments; The Kernel and the Processor.

The Browser is also an execution environment. If you write a JS program, then your program has 3 execution environments: The Browser, the kernel and the Processor.

I hope you get the drift, the systems underneath any sotware you write is part of the execution environment.

Chips and boards are mostly made from silicon and fibreglass. Metal forms a small percentage(dopants, connections).

I guess we should saybare-silicon programminginstead ofbare-metal programming?

Disabling the Standard Library

A earlier mentioned, when you write drivers, you cannot use the standard library. But you can use the core-library.

So what is this core library? (also known as lib-core).

Even before we discuss what the core library entails, lets answer this first:

How is it possible that the core library can get used as a dependency by bare-metal apps while the std library cannot get used as a dependency? How are we able to use the core library on bare metal?

well...Lib-core functions can be directly compiled to pure assembly and machine code without having to depend on pre-compiled OS-system binary files. Lib-core is dependency-free.

Lib-core is lean. It is a subset of the std library. This means that you lose a lot of functionalities if you decide to use lib-core ONLY.

Losing the std library's support means you forget about OS-supported functions like thread management, handling the file system, heap memory allocation, the network, random numbers, standard output, or any other features requiring OS abstractions or specific hardware. If you need them, you have to implement them yourself. The table below summarizes what you lose...

| feature | no_std | std |

|---|---|---|

| heap (dynamic memory) | * | ✓ |

| collections (Vec, BTreeMap, etc) | ** | ✓ |

| stack overflow protection | ✘ | ✓ |

| init code before main | ✘ | ✓ |

| libstd available | ✘ | ✓ |

| libcore available | ✓ | ✓ |

* Only if you use the alloc crate and use a suitable allocator like alloc-cortex-m.

** Only if you use the collections crate and configure a global default allocator.

** HashMap and HashSet are not available due to a lack of a secure random number generator.

You can find lib-core's documentation here

You can find the standard library's documentation here

The Core library

The Rust Core Library is the dependency-free foundation of The Rust Standard Library.

That is such a fancy statement... let us break it down.

Core is a library like any other. Meaning that your code can depend on it. You can find its documnetation at this page

What does the Core library contain? What does it do?

- Core contains the definitions and implementations of primitive types like

i32,char,booletc. So you need the core library if you are going to use primitives in your code. - Core contains the declaration and definitions of basic macros like

assertandassert_eq. - Core contains modules that provide basic functionalities. For example, the

arraymodule provides you with methods that will help you in manipulating an array primitive.

What does the core library lack that std has?

Core lacks libraries that depend on OS-system files and OS-level services.

For example, core lacks the following modules that are found in the std library ... mostly because the modules deal with OS-level functionalities.

std::threadmodule. Threading is a service that is typically provided by a kernel.std::envmodule. This module provides you with ways to Inspect and manipulate a process’ environment. Processes are usually an abstration provided by an OS.std::backtracestd::boxedstd::osstd::string

Look for the rest of the missing modules and try to answer the following questions :

- "why isn't this module not found in core?",

- "if it were to be implemented in core, how would the module interface look like?".

The above 2 questions are hard.

In the past, the experimental core::io did not exist, but now it does because the above two questions were answered(partially). It is still an ongoing answer.

Something to note, just because a module's name is found in both std and core, it is not a guarantee that both the modules contain identical contents. Modules with the same names have different contents.

For example, core::panic exposes ZERO functions while std::panic exposes around 9 functions.

Is the Core really dependency free?

A dependency-free library is a library that does not depend on any other external library or file. It is a library that is complete just by itself.

The core library is NOT fully dependency free. It just depends on VERY FEW external definitions.

The compiled core code typically contains undefined linker symbols. It is up to the programmer to provide extra libraries that contain the definitions of those undefined symbols.

So there you go... Core is not 100% dependency-free.

The undefined symbols include :

- Six Memory routine symbols :

memcopy,memmove,memset,memcmp,bcmp,strlen. - Two Panic symbols:

rust_begin_panic,eh_personality

What are these symbols?

We will discuss the above symbols in the next 2 sub-chapters

Panic Symbols

Disclaimer: The author is not completely versed with the internals of panic. Improvement contributions are highly welcome, you can edit this page however you wish.

The core library requires the following panic symbols to be defined :

rust_begin_paniceh_personality

Before we discuss why those symbols are needed, we need to understand how panicking happens in Rust.

To understand panicking, please read this chapter from the rust-dev-guide book.

It would also be nice to have a copy of the Rust source-code so that you can peek into the internals of both core::panic and std::panic.

But before, you read those resources... let me try to explain panicking.

Understanding panic from the ground up.

Panics can occur explicitly or implicitly. If this statement does not make sense, read this Rust-book-chapter.

We will deal with explicit panics for the sake of uniformity and simplicity. Explicit panics are invoked by the panic!() macro.

When the Rust language runtime encounters a panic! macro during program execution, it immediately documents it. It documents this info internally by instantiating a struct called Location. location stores the path-name of the file containing panic!(), the faulty line and the exact column of the 'source token parser'.

Here is the struct definition of Location:

#![allow(unused)] fn main() { pub struct Location<'a> { file: &'a str, line: u32, col: u32, } }

The Rust-runtime then looks for the panic message that will add more info about the panic. Most of the time, that message is declared by the programmer like shown below. Sometimes no message is provided.

#![allow(unused)] fn main() { let x = 10; panic!("panic message has been provided"); panic!(); /*panic message has NOT been provided*/ }

The runtime then takes the message and the location and consolidates them by putting them as fields in a new instance of struct PanicInfo. Here is the internal description of the PanicInfo :

#![allow(unused)] fn main() { pub struct PanicInfo<'a> { payload: &'a (dyn Any + Send), message: Option<&'a fmt::Arguments<'a>>, // here is the panic message, as you can see...it is optional location: &'a Location<'a>, // here is the location field, it is not optional can_unwind: bool, force_no_backtrace: bool, } }

Now that the rust-runtime has an instance of PanicInfo, it moves on to either one of these two steps depending on the circumstaces:

- It passes the

PanicInfoto the function tagged by a#[panic_handler]attribute - It passes the

PanicInfoto the function that has been set byset_hookortake_hook

If you are in a no-std environment, option 1 is taken.

If you are in a std-environment, option 2 is taken.

The #[panic_handler] attribute and panic hook

The panic_handler is an attribute that tags the function that gets called after PanicInfo has been instantiated AND before the start of either stack unwinding or program abortion.

The above ☝🏾 statement is heavy, let me explain.

When a panic happens, the aim of the sane programmer is to :

- capture the panic message and panic location (file, line, column).

- maybe print the message to the

stderror to some external display - Recover the program to a stable state using mechanisms like

unwrap_or_else - Terminate the program safely if the panic is unrecoverable.

Step 1

The Runtime automatically does for you step one by creating PanicInfo.

Step 2:

It is then up to the programmer to define a #[panic_handler] function that consumes the PanicInfo and implement step 2.

You can do something like this:

#![allow(unused)] fn main() { #[panic_handler] fn my_custom_panic_handler (_info: &PanicInfo) -> !{ println!("panic message: {}", _info.message()); // print message to stdout println!("panic location: file: {}, line: {}". info.location.file(), info.location.line()); loop{} } }

If you are in an std environment, implementing step 2 is optional. This is because the std library already defines a default function called panic_hook that prints the panic message and location to the stdout.

This means that if you define a #[panic_handler] function in an std environment, you will get a duplication compilation error. A program can only have one #[panic_handler].

The only way to define a new custom panic_hook in an std environment is to use the set_hook function.

Step 3 & 4:

Both the panic_hook and the #[panic_handler] function transfer control to a code block called panic-runtime. There are two kinds of panic-runtimes provided by rust.

- Panic-unwind runtime

- panic-abort runtime

The programmer has the option of choosing one of the two options before compilation. In a normal std environment, panic-unwind runtime is usually the default.

So what are these?

panic-abort is a code block that causes the panicked program to immediately terminate. It leaves the stack occupied in hope that the kernel will take over and clear the stack. panic-abort does not care about safe program termination.

panic-unwind runtime on the other hand cares about recovery, it will free the stack frame by frame while looking for a function that can act as a recoveror. On worst case, it will not find a reovery function and it will just safely terminate the program by clearing the stack and releasing used memory.

So if you want to recover from panics, your bet would be to choose the panic-unwind runtime as your runtime of choice.

So ... How is recovery implemented?

Panic Recovery

As earlier mentioned, when a panic-worthy line of code gets executed, the language runtime itself creates an instance of Location and Message and eventually creates an instance of PanicInfo or PanicHookInfo. That is why you as the programmer have no way to construct your own instance of PanicInfo. It is something created at runtime by the language runtime.

The language runtime then passes a reference of the PanicInfo to the #[panic_handler] or panic_hook.

The panic hook and #[panic_handler] do their thing and eventually call either of the 2 panic runtimes.

Hope we are on the same page till there.

Now that we are on the same page, we need to introduce some new terms...

catch_unwind and unwinding-handlers

The aim of the panic-unwind runtime is to achieve the following:

- deallocate the stack upwards, frame by frame.

- For every frame deallocated, it records that info as part of the Backtrace.

- It continuously hopes that it will eventually meet an

unwinding-handlerfunction frame that will make it stop the unwinding process

If the panic-unwind finally meets a handler, it stops unwinding and transfers control to the Handler. It also hands over the PanicInfo to that handler function.

So what are handler functions?

Handler functions are functions that have the ability to stop the unwinding, consume the PanicInfo created by a panic and do some recovery magic.

These handlers come in different forms. One of these forms is the catch_unwind function.

catch_unwind is a function provided by std that acts as a "bomb container" for a funtion that may panic.

This function takes in a closure that may panic and then runs that closure as an inner child. If the closure panics, catch_unwind() returns Err(panic_message). If the closure fails to panic, catch_unwind returns an Ok(value).

Below is a demo of a catch_unwind function in use:

#![allow(unused)] fn main() { use std::panic; let result = panic::catch_unwind(|| { println!("hello!"); }); assert!(result.is_ok()); let result = panic::catch_unwind(|| { panic!("oh no!"); }); assert!(result.is_err()) }

catch-unwind is not the only unwinding-handler, there are other implicit and explicit handlers. For example, Rust implicitly sets handlers that encompass each thread by default such that if a thread panics, it will unwind its stack till it meets either an explicit internal handler OR it eventually meets the implicit thread_handler inserted by the compiler during thread instantiation. The recoery mechanism implemented by rust in such a case is to return Result(_) to the parent thread.

What does it mean to catch a panic?

Catching a panic means preventing a program from completely terminating after a panic by either implicitly or explicitly adding handlers within your code.

You can add implicit handlers by enclosing dangerous functions in isolated threads and count on the language runtime to insert unwinding-handlers for you.

You can add an explicit handler by pasing a dangerous functions as an argument to a catch_unwind function

Unwind Safety

UnwindSafe is a marker trait that indicates whether a type is safe to use after a panic has occurred and the stack has unwound. It ensures that types do not leave the program in an inconsistent or undefined state after a panic, thus helping maintain safety in Rust's panic recovery mechanisms.

(undone: limited knowledge by initial author, contribution needed)

>My knowledge on unwind-safety ends there.

Any contributor can focus on showing the UnwindSafe, RefUnwindSafe and AssertUnwindSafe markers in action. You can even show where they fail (thank you in advance)

That's all concerning panic recovery, go figure out the rest.

Panic_impl and Symbols

During the compilation process, both the #[panic_handler] and panic_hook usually get converted into a language item called panic_impl.

In Rust, "language items" are special functions, types, and traits that the Rust compiler needs to know about in order to implement certain fundamental language features. These items are usually defined in the standard library (std) or the core library (core) and are marked with the #[lang = "..."] attribute.

Think of language items as 'tokens that affect the functionality of the compiler'.

Below is the signature of panic_impl, I hope you can see the direct similarity btwn #[panic_handler] and panic_impl

#![allow(unused)] fn main() { extern "Rust" { #[lang = "panic_impl"] fn panic_impl(pi: &PanicInfo<'_>) -> !; } }

The reason this conversion takes place is because the language designers wanted to introduce indirection for the sake of making std::panic to have the ability to override core::panic during compilation.

As the compilation levels go further, panic_impl gets compiled into the symbol rust_begin_panic. In the final binary file, the panic_impl symbol is absent.

I guess now you understand what the core library demands from you when it says that you need to provide a definition of the rust_begin_panic symbol.

eh_personality

As for the eh_personality symbol, is not really a symbol. It is a languge item.

The eh_personality language item marks a function that is used for implementing stack unwinding. By default, Rust uses unwinding to run the destructors of all live stack variables in case of a panic. This ensures that all used memory is freed and allows the parent thread to catch the panic and continue execution. Unwinding, however, is a complicated process and requires some OS-specific libraries (e.g. libunwind on Linux or structured exception handling on Windows)

If you choose to use the panic-unwind runtime, then you must define the unwinding function and tag it as the eh_personality language item

Memory Symbols

The memory symbols required by the core library are six:

memcopy,memmove,memset,memcmp,bcmp,strlen.

Rust codegen backends generate code that usually reference the above six functions.

It is up to the programmer to provide definitions of the above six functions. You need to provide them in a file containing the assembly code or machine code for your specific processor.

The definitions of memcpy, memmove, memset, memcmp, bcmp, and strlen are listed as requirements because they represent commonly used memory manipulation and string operation functions that are expected to be available for use by generated code in certain contexts. While they are not strictly required for every Rust program, they are often relied upon by code generated by Rust's compiler, especially in situations where low-level memory manipulation or string operations are necessary.

Here are some reasons why these definitions are listed as requirements:

-

Interoperability with C Code: Rust often needs to interoperate with existing C libraries or codebases. These C libraries commonly use functions like memcpy, memset, and strlen. Therefore, ensuring that Rust code can call these functions or be called from C code requires that their definitions are available.

-

Compiler Optimizations: Even if a Rust program doesn't explicitly call these functions, the Rust compiler may internally use them as part of optimization passes. For example, when optimizing memory accesses or string manipulations in Rust code, the compiler may choose to use these functions or their equivalents to generate more efficient machine code.

Disclaimer: The author is not completely versed with the internals of panic. Improvement contributions are highly welcome, you can edit this page however you wish. (undone)

here is a snippet from core documentation in concern of memory symbols:

memcpy,memmove,memset,memcmp,bcmp,strlen- These are core memory routines which are generated by Rust codegen backends. Additionally, this library(core lib) can make explicit calls tostrlen. Their signatures are the same as found in C, but there are extra assumptions about their semantics: Formemcpy,memmove,memset,memcmp, andbcmp, if thenparameter is 0, the function is assumed to not be UB, even if the pointers are NULL or dangling. (Note that making extra assumptions about these functions is common among compilers: clang and GCC do the same.) These functions are often provided by the system libc, but can also be provided by the compiler-builtins crate. Note that the library does not guarantee that it will always make these assumptions, so Rust user code directly calling the C functions should follow the C specification! The advice for Rust user code is to call the functions provided by this library instead (such asptr::copy).

Practicals

Now that you know a little bit about the core library, we can start writing programs that depend on core instead of std.

This chapter will take you through the process of writing a no-std program.

We will try our very best to do things in a procedural manner...step by step... handling each error slowly.

If you do not wish to go through these practicals(1,2 & 3) in a stepwise fashion, you can find the complete no-std template here

Step 1: Disabling the Std library

Go to your terminal and create a new empty project :

cargo new no_std_template --bin

Navigate to the src/main.rs file and open it.

By default, rust programs depend on the standard library. To disable this dependence, you add the '#[no_std]` attribute to your code. The no-std attribute removes the standard lobrary from the crate's scope.

#![no_std] // added the no-std attribute at macro level (ie an attribute that affects the whole crate) fn main(){ println!("Hello world!!"); }

If you build this code, you get 3 compilation errors.

- error 1: "cannot find macro

printlnin this scope" - error 2: "

#[panic_handler]function required, but not found" - error 3: "unwinding panics are not supported without std"

You can run this code by pressing the play button found at the top-right corner of the code block above. Or you can just write it yourself and run it on your local machine.

Step 2: Fixing the first Error

The error that we are attempting to fix is...

# ... snipped out some lines here ...

error: cannot find macro `println` in this scope

--> src/main.rs:3:5

|

3 | println!("Hello, world!");

| ^^^^^^^

# ... snipped out some lines here ...

The println! macro is part of the std library. So when we removed the std library from our crate's scope using the #![no_std] attribute, we effectively made the std::println macro to also go out of scope.

To fix the first error, we either...

- Stop using

std::printlnin our code - Define our own custom

println - Bring

stdlibrary back into scope.(Doing this will go against the main aim of this chapter; to write a no-std program)

We cannot choose option 3 because the aim of this chapter is to get rid of any dependence on the std library.

We could choose option 2 but implementing our own println will be cost us unnecessary hardwork. Right now we just want to get our no-std code compiling... For the sake of simplicity, we will not choose option 2. We will however write our own println in a later chapter.

So we choose the first option, we choose to comment out the line that uses the proverbial println.

This has been demonstrated below.

#![no_std] fn main(){ // println!("Hello world!!"); // we comment out this line. println is indeed undefined }

Only two compilation errors remain...

Fixing the second and third compilation errors

This is going to be a short fix but with a lot of theory behind it.

To solve it, we have to understand the core library requirements first.

The core library functions and definitions can get compiled for any target, provided that the target provides definitions of certain linker symbols. The symbols needed are :

- memcpy, memmove, memset, memcmp, bcmp, strlen.

- rust_begin_panic

- rust_eh_personality (this is not a symbol, it is actually a language item)

In other words, you can write whatever you want for any supported ISA, as long as you link files that contain the definitions of the above symbols.

1. memcpy, memmove, memset, memcmp, bcmp and strlen symbols

These are all symbols that point to memory routines.

You need to provide to the linker the ISA code that implements the above routines.

When you compile Rust code for a specific target architecture (ISA - Instruction Set Architecture), the Rust compiler needs to know how to generate machine code compatible with that architecture. For many common architectures, such as x86, ARM, or MIPS, the Rust toolchain already includes pre-defined implementations of these memory routines. Therefore, if your target architecture is one of these supported ones, you don't need to worry about providing these definitions yourself.

However, if you're targeting a custom architecture or an architecture that isn't directly supported by the Rust toolchain, you'll need to provide implementations for these memory routines. This ensures that the generated machine code will correctly interact with memory according to the specifics of your architecture.

2. the rust_begin_panic symbol

This symbol is used by Rust's panic mechanism, which is invoked when unrecoverable errors occur during program execution. Implementing this symbol allows the generated code to handle panics correctly.

You could say that THIS symbol references the function that the Rust runtime calls whenever a panic happens.

This means that you have to...

- Define a function that acts as the overall panic handler.

- Put that function in a file

- Link that file with your driver code when compiling.

For the sake of ergonomics, the cool rust developers provided a 'panic-handler' attribute that you can attach to a divergent function. You do not have to do all the linking vodoo. This has been demonstrated later on... do not worry if this statement did not make sense.

You can also revisit the subchapter on panic symbols to get a clear relationship between the rust_begin_panic symbol and the #[panic_handler] attribute.

3. The rust_eh_personality

When a panic happens, the rust runtime starts unwinding the stack so that it can free the memory of the affected stack variables. This unwinding also ensures that the parent thread catches the panic and maybe deal with it.

Unwinding is awesome... but complicated to implement without the help of the std library. Coughs in soy-dev.

The rust_eh_personality is not a linker symbol. It is a language item that points to code that defines how the rust runtime behaves if a panic happens : "does it unwind the stack? How does it unwind the stack? Or does it just refuse to unwind the stack and instead just end program execution?

To set this language behaviour, we are faced with two solutions :

- Tell rust that it should not unwind the stack and instead, it should just abort the entire program.

- Tell rust that it should unwind the stack... and then offer it a pointer to a function definition that clearly implements the unwinding process. (we are soy-devs, this option is completely and utterly off the table!!)

Step 3: Fixing the second compiler error

The remaining errors were ...

error: `#[panic_handler]` function required, but not found

error: language item required, but not found: `eh_personality`

|

= note: this can occur when a binary crate with `#![no_std]` is compiled for a target where `eh_personality` is defined in the standard library

= help: you may be able to compile for a target that doesn't need `eh_personality`, specify a target with `--target` or in `.cargo/config`

error: could not compile `playground` (bin "playground") due to 2 previous errors

This is our second error...

error: `#[panic_handler]` function required, but not found

This is our third...

error: language item required, but not found: `eh_personality`

Just like you guessed, the second error occured because the 'rust_begin_panic symbol' has not been defined. We solve this by pinning a '#[panic_handler]' attribute on a divergent function that takes 'panicInfo' as its input. This has been demonstrated below. A divergent function is a function that never returns.

#![no_std] use core::panic::PanicInfo; #[panic_handler] // you can name this function any name...it does not matter. eg the_coolest_name_in_the_world // The function takes in a reference to the panic Info. // Kid, go read the docs in core::panic module. It's short & sweet. You will revisit it a couple of times though fn default_panic_handler(_info: &PanicInfo) -> !{ loop { // function does nothing for now, but this is where you write your magic // // This is where you typically call an exception handler, or call code that logs the error or panic messages before aborting the program // The function never returns, this is an endless loop... The panic_handler is a divergent function } } fn main(){ // println!("Hello world!!"); }

Would you look at that... if you compile this program, you'll notice that the second compilation error is gone!!!

Step 4: Fixing the Third Error

The third error states that the 'eh_personality' language item is missing.

It is missing because we have not declared it anywhere... we haven't even defined a stack unwinding function. So we just configure our program to never unwind the stack, that way... defining the 'eh_personality' becomes optional.

We do this by adding the following lines in the cargo.toml file :

# this is the cargo.toml file

[package]

name = "driver_code"

version = "0.1.0"

edition = "2021"

[profile.release]

panic = "abort" # if the program panics, just abort. Do not try to unwind the stack

[profile.dev]

panic = "abort" # if the program panics, just abort. Do not try to unwind the stack

Now ... drum-roll... time to compile our program without any errors....

But then ... out of no-where, we get a new diferent error ...

error: using `fn main` requires the standard library

|

= help: use `#![no_main]` to bypass the Rust generated entrypoint and declare a platform specific entrypoint yourself, usually with `#[no_mangle]`

Aahh errors... headaches...

But at least it is a new error. 🤌🏼🥹

It's a new error guys!! 🥳💪🏼😎

Practicals : Part 2

At the end of the last sub-chapter, we got the following error :

error: using `fn main` requires the standard library

|

= help: use `#![no_main]` to bypass the Rust generated entrypoint and declare a platform specific entrypoint yourself, usually with `#[no_mangle]`

Before we solve it, we need to cover some theory...

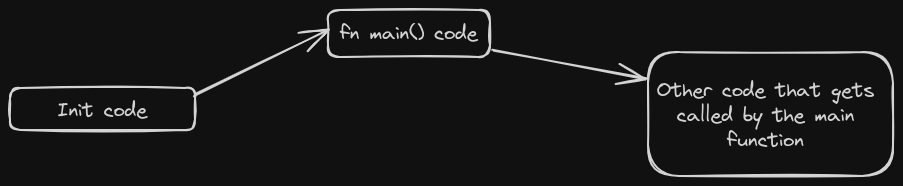

Init code

'init code' is the code that gets called before the 'main()' function gets called. 'Init code' is not a standard name, it is just an informal name that we will use in this book, but I hope you get the meaning here. Init_code is the code that gets executed in preparation for the main function.

To understand 'init code', we need to understand how programs get loaded into memory.

When you start your laptop, the kernel gets loaded into memory.

When you open the message_app in your phone, the message_app gets loaded into memory.

When you open VLC media player on your laptop, the VLC media player gets loaded into memory (The RAM).

To dive deeper into this loading business, let's look at how the kernel gets loaded.

Loading the kernel.

When the power button on a machine(laptop) is pressed, the following events occur. (this is just a summary, you could write an entire book on kernel loading):

-

Power flows into the processor. The processor immediately begins the fetch-execute cycle. Except that the first fetch occurs from the ROM where the firmware is.

-

So in short, the firmware starts getting executed. The firmware performs a power-on-self test.

-

The firmware then makes the CPU to start fetching instructions from the ROM section that contains code for the

primary loader. The primary loader in this case is a program that can copy another program from ROM and paste it in the RAM in an orderly pre-defined way. By orderly way I mean ... it sets up space for the the stack, adds some stack-control code to the RAM(eg stack-protection code), and then loads up the different sections of the program that's getting loaded. If the program has overlays - it loads up the code that implements overlay control too.

Essentially, the loader pastes a program on the RAM in a complete way. -

The

primary loaderloads the Bootloader onto the RAM. -

The

primary loaderthen makes the CPU instruction pointer to point to the RAM section where the Bootloader got pasted. This results in the execution of the bootloader. -

The Bootloader then starts looking for the kernel code. The kernel might be in the hard-disk or even in a bootable usb-flash.

-

The Bootloader then copies the kernel code onto the RAM and makes the CPU pointer to point to the entry point of the kernel. An entry-point is the memory address of the first instruction for any program.

-

From there, the kernel code takes full charge and does what it does best.

-

The kernel can then load the apps that run on top of it... an endless foodchain.

Why are we discussing all these?

To show that programs run ONLY because these two conditions get fulfilled:

-

They were loaded onto either the ROM or the RAM in a COMPLETE way.

The word Complete in this context means that the program code was not copied alone; The program code was copied together with control code segments that deal with things like stack control and overlay-control. The action of copying 'control' code onto the RAM is part of Setting up the environment before program execution starts.In the software world, this control code is typically called runtime code.

-

The CPU's instruction pointer happened to point to the entry point of the already loaded program. An entry-point is the memory address of the first instruction for a certain program.

Loading a Rust Program

From the previous discussion, it became clear that to run a program, you have to do the following :

- load the program to a memory where the CPU can fetch from (typically the RAM or ROM.).

- load the runtime for the program into memory. The runtime in this case means 'control code' that takes care of things like stack-overflow protection.

- make the CPU to point to the entry_point of the (loaded program + loaded runtime)

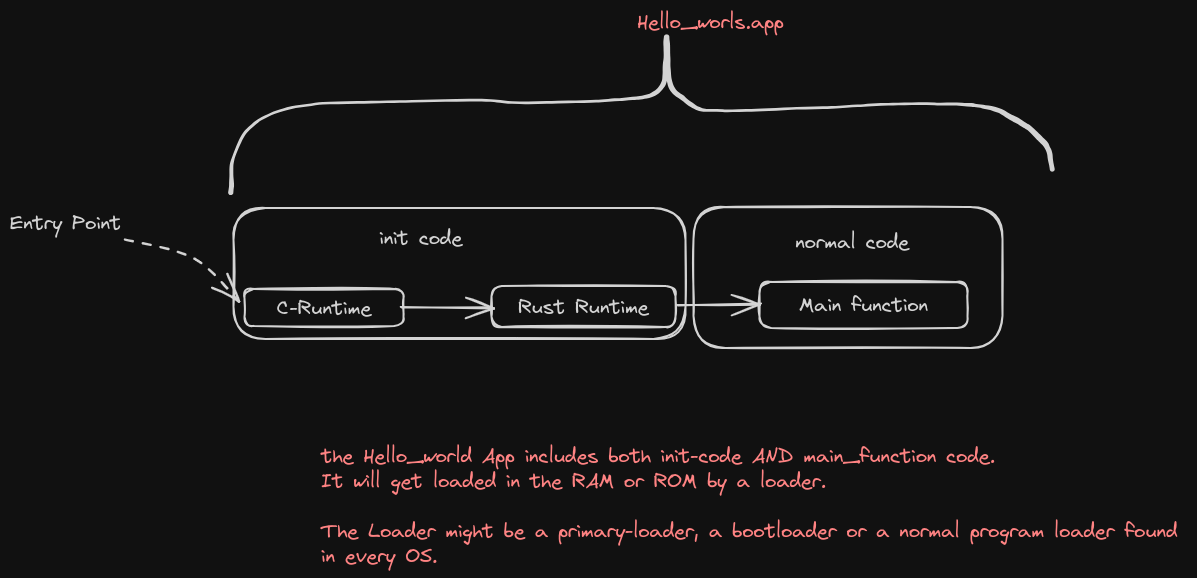

A typical Rust program that depends on the std library is ran in exactly the same way. The runtime code for such programs includes files from both the C-runtime and the Rust Runtime.

When a Rust program gets loaded into memory, it gets loaded together with the C and Rust runtimes.

The normal entry point chain

The normal entry point chain describes the order in which code gets executed and their respective entry-point labels.

In Rust the C-runtime gets executed first, then the Rust runtime and finally the normal code.

The entry_point function of the C-runtime is the function named _start.

After the C runtime does its thing, it transfers control to the Rust-runtime. The entrypoint of the Rust-runtime is labelled as a start language item.

The Rust runtime also does its thing and finally calls the main function found in the normal code.

And that's it! Magic!

During program execution, the instruction pointer occasionally jumps to appropriate Rust runtime sections. For example, during a panic, the instruction pointer will jump to the rust_begin_panic symbol that is part of the Rust runtime.

Understanding Runtimes

To understand exactly what the above two runtimes do, read these two chapters below. They are not perfect, but they are a good start.

In summary, the C-runtime does most of the heavy-lifting, it sets up ...(undone).

The Rust Runtime takes care of some small things such as setting up stack overflow guards or printing a backtrace on panic.

Fixing the Error

To save you some scrolling time, here is the error we are trying to fix.

error: using `fn main` requires the standard library

|

= help: use `#![no_main]` to bypass the Rust generated entrypoint and declare a platform specific entrypoint yourself, usually with `#[no_mangle]`

This error occurs because we have not specified the entrypoint chain of our program.

If we had used the std library, the default entry-point chain could have been chosen automatically ie the entry point could have been assumed to be the '_start' symbol that directly references the C-runtime entrypoint.

To tell the Rust compiler that we don’t want to use the normal entry point chain, we add the #![no_main] attribute. Here's a demo :

#![allow(unused)] #![no_std] #![no_main] // here is the new line. We have added the no_main macro attribute fn main() { use core::panic::PanicInfo; #[panic_handler] fn default_panic_handler(_info: &PanicInfo) -> !{ loop { /* magic goes here */ } } // main function has just been trashed... coz... why not? It's pointless }

But when we compile this, we get a linking error, something like this ...

error: linking with `cc` failed: exit status: 1

|

# some lines have been hidden here for the sake of presentability...

= note: LC_ALL="C" PATH="/home/k/.rustup/toolchains/nightly-x86_64-unknown-linux-gnu/lib/rustlib/x86_64-unknown-linux-gnu/bin:/home/k/.cargo/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games:/snap/bin" VSLANG="1033" "cc" "-m64" "/tmp/rustcWMxOew/symbols.o" "/home/k/ME/Repos/embedded_tunnel/driver-development-book/driver_code/target/debug/deps/driver_code-4c11dfa3f10db3d0.f20457jvl65bh2w.rcgu.o" "-Wl,--as-needed" "-L" "/home/k/ME/Repos/embedded_tunnel/driver-development-book/driver_code/target/debug/deps" "-L" "/home/k/.rustup/toolchains/nightly-x86_64-unknown-linux-gnu/lib/rustlib/x86_64-unknown-linux-gnu/lib" "-Wl,-Bstatic" "/home/k/.rustup/toolchains/nightly-x86_64-unknown-linux-gnu/lib/rustlib/x86_64-unknown-linux-gnu/lib/librustc_std_workspace_core-9686387289eaa322.rlib" "/home/k/.rustup/toolchains/nightly-x86_64-unknown-linux-gnu/lib/rustlib/x86_64-unknown-linux-gnu/lib/libcore-632ae0f28c5e55ff.rlib" "/home/k/.rustup/toolchains/nightly-x86_64-unknown-linux-gnu/lib/rustlib/x86_64-unknown-linux-gnu/lib/libcompiler_builtins-3166674eacfcf914.rlib" "-Wl,-Bdynamic" "-Wl,--eh-frame-hdr" "-Wl,-z,noexecstack" "-L" "/home/k/.rustup/toolchains/nightly-x86_64-unknown-linux-gnu/lib/rustlib/x86_64-unknown-linux-gnu/lib" "-o" "/home/k/ME/Repos/embedded_tunnel/driver-development-book/driver_code/target/debug/deps/driver_code-4c11dfa3f10db3d0" "-Wl,--gc-sections" "-pie" "-Wl,-z,relro,-z,now" "-nodefaultlibs"

= note: /usr/bin/ld: /usr/lib/gcc/x86_64-linux-gnu/11/../../../x86_64-linux-gnu/Scrt1.o: in function `_start':

(.text+0x1b): undefined reference to `main'

/usr/bin/ld: (.text+0x21): undefined reference to `__libc_start_main'

collect2: error: ld returned 1 exit status

= note: some `extern` functions couldn't be found; some native libraries may need to be installed or have their path specified

= note: use the `-l` flag to specify native libraries to link

= note: use the `cargo:rustc-link-lib` directive to specify the native libraries to link with Cargo (see https://doc.rust-lang.org/cargo/reference/build-scripts.html#cargorustc-link-libkindname)

This error occurs because the toolchain thinks that we are compiling for our host machine and therefore decides to use the default linker script targeting the host machine... which in this case happens to be a linux-mint machine with a x86_64 CPU.

The reason this is a problem is because that linker script references start files that reference undefined symbols like __libc_start_main and start.

To view the default linker script that gets used, you can follow these steps

To fix this error, we implement one of the following solutions :

- Specify a cargo-build for a triple target that has 'none' in its OS description. eg

riscv32i-unknown-none-elf. This is the easier of the two solutions, and it is the most flexible. - Supply a new linker script that defines our custom entry-point and section layout. If this method is used, the build process will still treat the host's triple-target as the compilation target.

If the above 2 possible solutions made complete sense to you, and you were even able to implement them, just skim through the next few sub-chapters as a way to humor yourself.

If they did not make sense, then you got some reading to do in the next immediate sub-chapters...

Don't worry, we will get to a point where our bare-metal code will run without a hitch... but it's a long way to go.

The next subchapters will be just theory...we'll fix the compiler error soon.

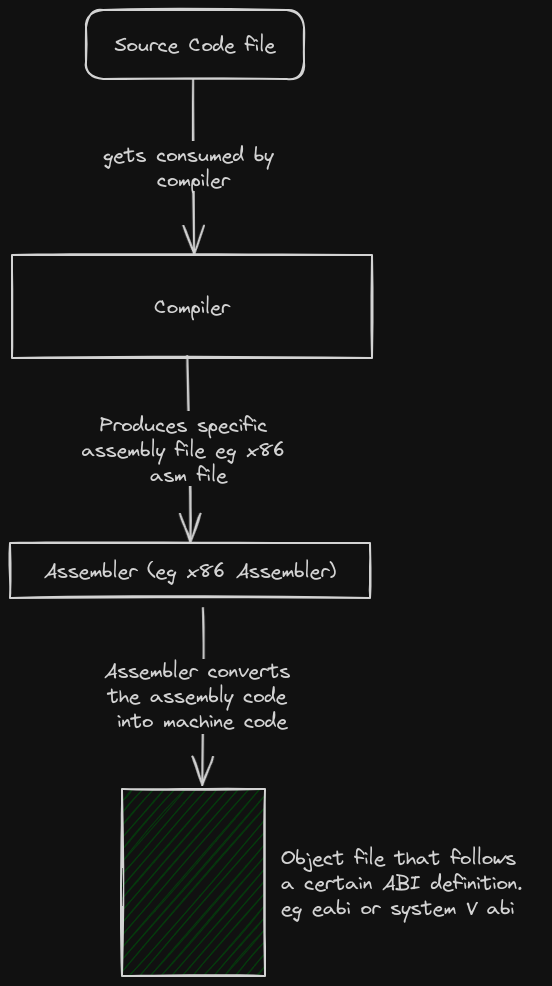

Cross-Compilation

Compilation is the process of converting high level code into low-level code. This typically involves converting source code into machine code. ie. converting text into zeroes-and-ones.

In less typical cases, you can convert high-level code into less-high-level code. eg Rust to LLVM-IR (Intermediate representation). Point is, compilation isn't always about translating source code into binary.

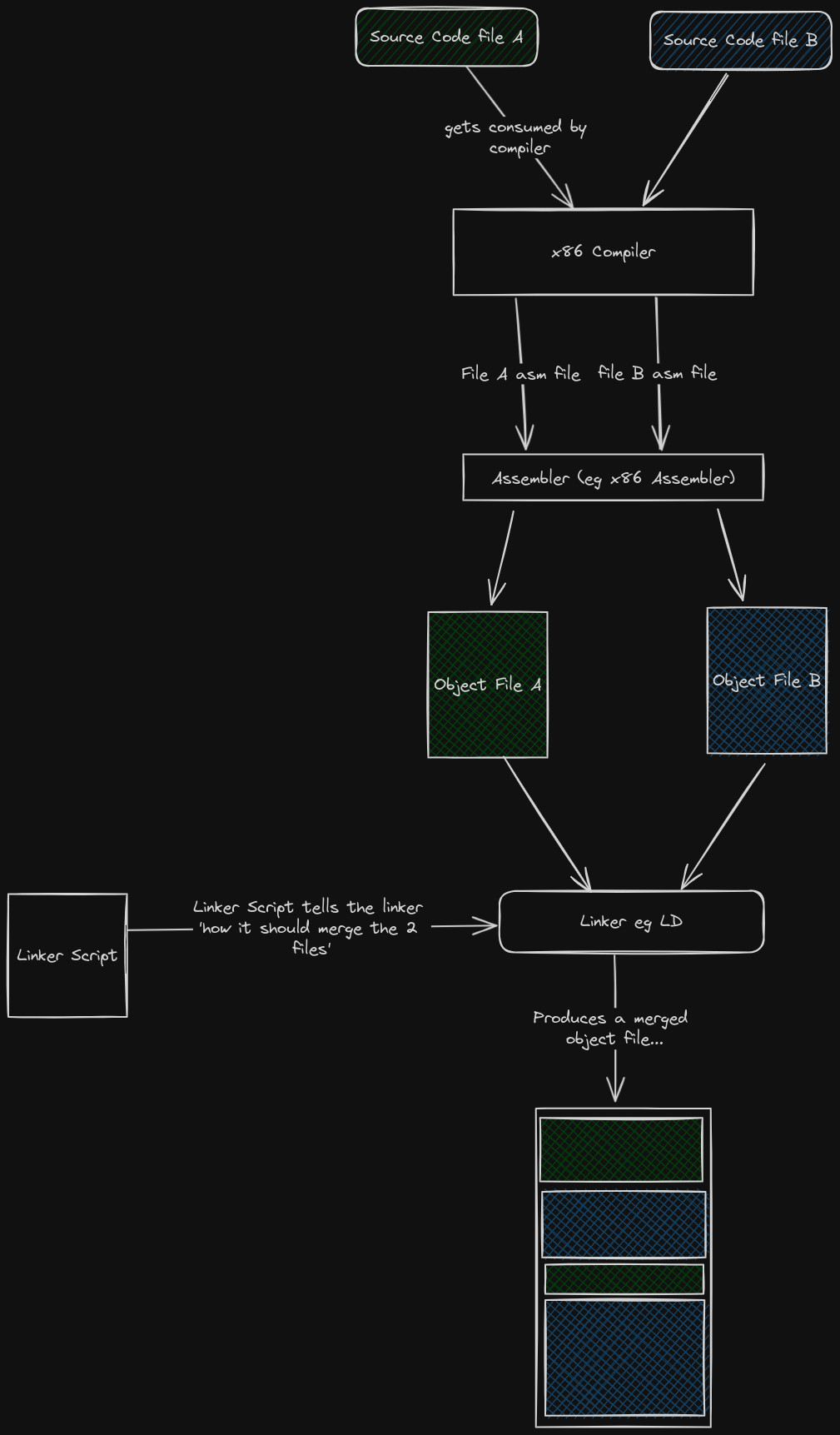

The compilation process for a single file roughly looks like this ...

When multiple files need to get compiled together, the linker gets introduced :

Before we discuss further, make sure you are conversant with the following buzzwords :

- Instruction Set Architecture (ISA)

- Application Binary Interface (ABI)

- Application Programming Interface (API)

- An Execution environment

- The host machine is the machine on which you develop and compile your software.

- The target machine is the machine that runs the compiled sotware. ie the machine that you are compiling for.

Target

If we are compiling program x to run on machine y, then machine y is typically referred to as the Target.

If we compile the hello-world for different targets, we may end up with object files that are completely different from each other in terms of file format and file content.

This is because the format and contents of the object file are majorly affected by the following factors :

-

The CPU Architecture of the target.

Each ISA has its own machine code syntax, semantics and encoding. This means that the add_function may be encoded as001in ISAxand as011in ISAy. So even if the instructions look identical, the object files end up having a different combination of zeroes and ones. -

The Vendor-specific implementations on both the software and hardware of the target machine. (undone: this sentence needs further explanations)

-

The Execution environment on which the compiled program is supposed to run on. In most cases the Execution environment is usually the OS. The execution environment affects the kind of symbols that get used in the object files. For example, a program that relies on the availability of a full-featured POSIX OS will have different symbols than those found in a NON-POSIX OS.

-

The ABI of the execution environment. The structure and content of the object file is almost entirely dependent on the ABI.

To find out how these 4 factors affect the object file, read here.

The above 4 factors were so influential to target files that people started describing targets based on the state of the above 4 factors. For example :

Target x86_64-unknown-linux-gnu means that the target machine contains a x86 CPU, the vendor is unknown and inconsequential, the execution environment is an Operating system called Linux, the execution environment can interact with object files ONLY if they follow the GNU-ABI specification.

Target riscv32-unknown-none-elf means that the target machine contains a Riscv32 CPU, the vendor is unknown and inconsequential, the execution environment is nothing but bare metal, the execution environment can interact with object files ONLY if they follow the elf specification.

People usually call these target specifications triple targets...

Don't let the name fool you, some names contain 2 parameters, others 4 ... others 5. The name Triple-target is a misnomer. Triple-targets don't refer to 3-parameter names alone.

The software world has a naming problem...once you notice it, you see it everywhere. For example, what is a toolchain? Is it a combination of the compiler, linker and assembler? Or do we throw in the debugger? or maybe even the IDE? What is an IDE?? Is a text Editor with plugins an IDE?? You see? Madness everywhere!! Naming things is a big problem.

Why are triple-target definitions important? --> Toolchain Setup

Because they help you in choosing and configuring your compiler, assembler and linker in such a way that allows you to build object files that are compatible with the target.

For example, if you were planning to compile program x for a x86_64-unknown-linux-gnu target....

- You would look for a x86_64 compiler, and install it. A riscv compiler would be useless. An ARM compiler would also be useless.

- You would look for a x86_64 assembler, and install it. Any other assembler would be useless.

- You would then look for system files that were made specifically for the Linux kernel. For example, system files with an implementation of the C standard library such as glibc, newlib and musl.

- You would look for a linker that can process and output GNU-ABI-compliant object files

- You would then write a linker script that references symbols found in the linux-specific system files. That linker script should also outline the layout of an object file that the kernel can load eg Elf-file layout.

- You would then configure all these tools and libraries to work together.

This is a lot of work and stress. Let us call this problem the toolchain-setup problem. This is because the word toolchain typically refers to the combination of tools such as the Compiler, linker, assembler, debugger and object-file manipulation tools.

Rust has a solution to this toolchain-setup problem.

Enter target specification

Rust solves the toolchain-setup problem by providing a compiler feature called target specification. This feature allows you to create object files for any target architeture that you specify. The compiler will automatically take care of choosing a linker, providing a linker script, finding the right system-files and take care of other configurations.

If you had installed your Rust toolchain in the normal fashion... ie. using rustup, then there is a high chance that your compiler has the ability to produce object files for your host-machine's triple-target ONLY.

To see the target-architecture AND triple-target name of your machine, run the following commands :

uname --machine # This outputs your machine's ISA

# this only works for linux machines.

# the author had no idea how to do it in Windows & MacOS. Sorry.

gcc -dumpmachine # This command outputs the triple-target

# only works if you have gcc installed

To see which triple-targets your rust compiler can produce object files for, run the following command :

rustup target list --installed

You can make the compiler to acquire the ability to compile for an new additional triple-target by running the command below :

# rustup target add <new-triple-target-name>

rustup target add riscv32imc-unknown-none-elf

# To see the possible triple-target names that could be used in the command above, run this command

rustup target list

You can then make the compiler to produce an object file for a specific target using the command below :

# cross-compile for any target whose target has already been installed

# The syntax of the command is :

# cargo build <name_of_your_codebase> --target=<name_of_your_triple_target>

cargo build hello-world --target=riscv32imc-unknown-none-elf

Cross-compilation

Cross-compilation is the act of compiling a program for a target machine whose triple-target specification is different from the triple-target specification of the host machine.

We achieve cross-compilation in Rust by using the Target-specification compiler feature discussed above.

Making cross-compilation easier with cargo

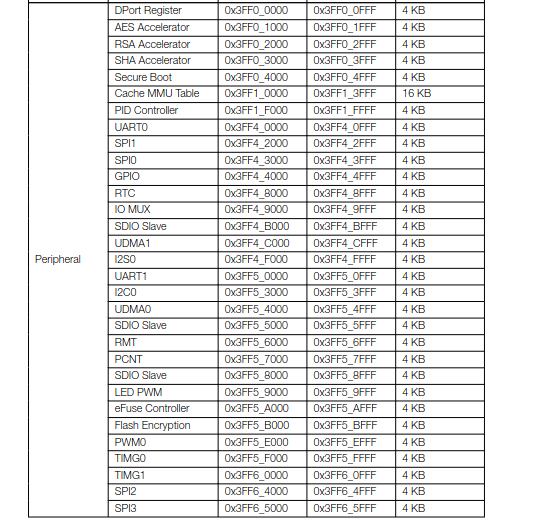

Example case :